Creating a product is like raising a child — you want all the best for them. And when you have a demanding project ahead, you want to perform exceptionally well.

When the flespi team released its own MQTT broker at the end of 2017, another part of Gurtam, the Development Center responsible for the flagship Wialon Hosting platform, started using it in ongoing product development.

Wialon Hosting, located in the Wialon Data Center, has over 800 000 connected GPS devices sending telemetry to its servers a few times per minute. This results in up to 20 000 new messages per second, each about 300 bytes in its JSON representation.

The idea was to publish all these messages to the MQTT broker and consume them from various locations and for various needs.

Testing the MQTT broker

First, we checked whether the broker itself could continuously absorb such a data flow, and looked for the C++ client implementation with the highest throughput.

It took us a month to test it under various conditions at 4 to 10 times the actual load. So far, our MQTT broker can process 200 000 300-byte messages per second with no visible impact on CPU, I/O, or memory on the servers. Of course, we optimized it for this kind of load.

Next, we needed to feed the entire data flow to the broker: 20 000 incoming messages per second, each a JSON payload of about 300 bytes. We wanted to build some business logic for message processing, e.g., detect messages with LBS information and maintain an up-to-date map of LBS base stations, or store messages in backup storage. The criteria we care about cannot be expressed in the MQTT message topic, so the broker cannot filter the flow and deliver only the messages we want; the consumer has to receive everything and filter it itself.

Initially, we just took Python with the standard paho library... and it sucked. We maxed out the CPU of a modern Xeon-based server but still couldn’t process all the traffic.
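To put these rates into bandwidth terms, here is a small back-of-the-envelope calculation. It is only a sketch: it counts JSON payload bytes and ignores MQTT and TCP overhead, and the function name is ours, not part of any flespi tooling.

```python
MESSAGE_SIZE_BYTES = 300   # approximate JSON message size from the article
PRODUCTION_RATE = 20_000   # peak messages per second in production
TEST_RATE = 200_000        # messages per second reached in the broker test


def payload_bandwidth(rate_per_sec, size_bytes=MESSAGE_SIZE_BYTES):
    """Return payload-only bandwidth; protocol overhead is not included."""
    bytes_per_sec = rate_per_sec * size_bytes
    return {
        "MB_per_sec": bytes_per_sec / 1_000_000,
        "Mbit_per_sec": bytes_per_sec * 8 / 1_000_000,
    }


if __name__ == "__main__":
    for label, rate in (("production", PRODUCTION_RATE), ("broker test", TEST_RATE)):
        bw = payload_bandwidth(rate)
        print(f"{label}: {bw['MB_per_sec']:.0f} MB/s, {bw['Mbit_per_sec']:.0f} Mbit/s")
```

Under these assumptions the production flow is about 6 MB/s (48 Mbit/s) of payload, and the tested load is about 60 MB/s (480 Mbit/s).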

Benchmarking the MQTT + JSON stacks

This small failure prompted us to benchmark popular MQTT + JSON stacks alongside our own MQTT implementation. We benchmarked the task in question: from all messages, extract only those that carry LBS information and publish them back to the MQTT broker under a different topic.

Each message delivered to the broker had a standard Wialon message JSON format like this:

{"id":160714,"msg":{"t":1516779384,"f":1073741827,"tp":"ud","pos":{"y":59.9377466,"x":30.450435,"z":3.2,"s":21,"c":240,"sc":10},"i":0,"lc":0,"p":{"hdop":0.8,"io_caused":7,"gsm_signal":17,"fuel_lvl":0,"current_profile":1,"pcb_temp":31,"movement_sens":0,"battery":4033,"power":25458,"battery_current":1,"can_rpm":0,"adc1":0,"adc2":0,"can_fuel_used":0,"can_distance":0,"odometer":400507371,"gsm_operator":25001}}}

Our job was to detect messages with the ‘mnc’, ‘mcc’, ‘lac’, and ‘cell_id’ values in the ‘p’ parameter and deliver them to another topic via MQTT.

We tested Python 3 code with the following MQTT clients: aiomqtt, hbmqtt, paho, and gmqtt, using different event loops, and also tried an alternative Python implementation, PyPy.

We could have stopped at benchmarking Python implementations and libraries, but we were curious about the wider picture. So we added the flespi implementation of the MQTT client written in pure C (kibo-c) to the candidate list. We also created a similar Lua script implementing the business logic (kibo-lua), with MQTT and JSON handled by our C library. We also tried Go with the paho library, just for fun.

All source code is available for download; see the links in the table below. To reproduce the setup, you need your own message publisher and must replace ‘XXXXX’ with a valid flespi token.
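As an illustration, the detection step might look like the following in Python. This is a minimal sketch, not the benchmarked code: it assumes the ‘p’ object sits under ‘msg’ as in the sample above, and that a message counts as LBS only when all four keys are present.

```python
import json

# Keys named in the article; this sketch requires all four to be present.
LBS_KEYS = frozenset({"mnc", "mcc", "lac", "cell_id"})


def has_lbs(raw):
    """Return True if the raw JSON message carries LBS data in msg.p."""
    try:
        doc = json.loads(raw)
    except ValueError:  # malformed JSON or undecodable bytes
        return False
    if not isinstance(doc, dict):
        return False
    msg = doc.get("msg")
    if not isinstance(msg, dict):
        return False
    params = msg.get("p")
    # issubset() iterates over the dict keys of "p".
    return isinstance(params, dict) and LBS_KEYS.issubset(params)


if __name__ == "__main__":
    plain = b'{"id":160714,"msg":{"t":1516779384,"p":{"hdop":0.8,"gsm_signal":17}}}'
    with_lbs = b'{"id":1,"msg":{"p":{"mnc":1,"mcc":250,"lac":7,"cell_id":42}}}'
    print(has_lbs(plain), has_lbs(with_lbs))
```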

Side note: We discovered that our commercial plan traffic limitations are OK to handle this massive load, but with the free plan, you might hit the limits at 10 000 messages/second.

Testing environment: We ran the benchmark on a single core of an Intel(R) Xeon(R) Silver 4114 CPU @ 2.20GHz (Turbo Boost up to 3GHz) with 132GB of RAM.

We benchmarked two cases:

entire workflow: receive MQTT => parse JSON => detect LBS => publish back to MQTT;

limited workflow: just receive MQTT messages, to test the pure MQTT library independently of the JSON parser implementation.

For each scenario, we also calculated a so-called CPU score that reflects how many messages the CPU can handle. Higher values mean a more efficient implementation.
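The two cases can be sketched as a pair of message callbacks. This is an illustration under assumptions, not the benchmarked code: the callbacks use the `(client, userdata, msg)` shape that paho uses for `on_message`, the output topic name is hypothetical, and `RecordingClient` and `Message` are stand-ins so the example runs without a broker.

```python
import json

LBS_KEYS = ("mnc", "mcc", "lac", "cell_id")
LBS_TOPIC = "lbs/messages"  # hypothetical output topic


def on_message_full(client, userdata, msg):
    """Entire workflow: parse JSON, detect LBS, publish matches back."""
    try:
        params = json.loads(msg.payload)["msg"]["p"]
    except (ValueError, KeyError, TypeError):
        return  # malformed or unexpected message: skip it
    if isinstance(params, dict) and all(key in params for key in LBS_KEYS):
        client.publish(LBS_TOPIC, msg.payload)


def on_message_limited(client, userdata, msg):
    """Limited workflow: only count the message, no parsing or publishing."""
    userdata["received"] += 1


class RecordingClient:
    """Stand-in for an MQTT client; it only records what would be published."""

    def __init__(self):
        self.published = []

    def publish(self, topic, payload):
        self.published.append((topic, payload))


class Message:
    """Stand-in for a received MQTT message."""

    def __init__(self, topic, payload):
        self.topic = topic
        self.payload = payload


if __name__ == "__main__":
    client = RecordingClient()
    counter = {"received": 0}
    for payload in (
        b'{"msg":{"p":{"mnc":1,"mcc":250,"lac":7,"cell_id":42}}}',
        b'{"msg":{"p":{"hdop":0.8}}}',
    ):
        message = Message("wialon/messages", payload)
        on_message_full(client, None, message)
        on_message_limited(client, counter, message)
    print(len(client.published), counter["received"])
```

With a real client library, the same functions would be registered as the message callback after subscribing to the input topic.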
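The article does not spell out how the CPU score is computed. The published values in the tables below are, however, consistent with dividing the message rate by the CPU load in percent and multiplying by 10, i.e. messages per second per 10% of one core. The sketch below encodes that inferred formula; treat it as our reading of the numbers rather than an official definition.

```python
def cpu_score(msgs_per_sec, cpu_load_percent):
    """Inferred formula: message rate per 10% of a single CPU core."""
    if cpu_load_percent <= 0:
        raise ValueError("CPU load must be positive")
    return 10 * msgs_per_sec / cpu_load_percent


if __name__ == "__main__":
    # (implementation, msgs/sec, CPU load %) taken from the results tables
    rows = [
        ("python3-aiomqtt, entire workflow", 4550, 119),
        ("kibo-c, entire workflow", 25600, 39),
        ("kibo-c, limited workflow", 24152, 5.6),
    ]
    for name, rate, load in rows:
        print(f"{name}: {cpu_score(rate, load):.0f}")
```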

Benchmarking results

Here are the CPU and RAM usage figures and the resulting scores for all tested MQTT + JSON implementations.

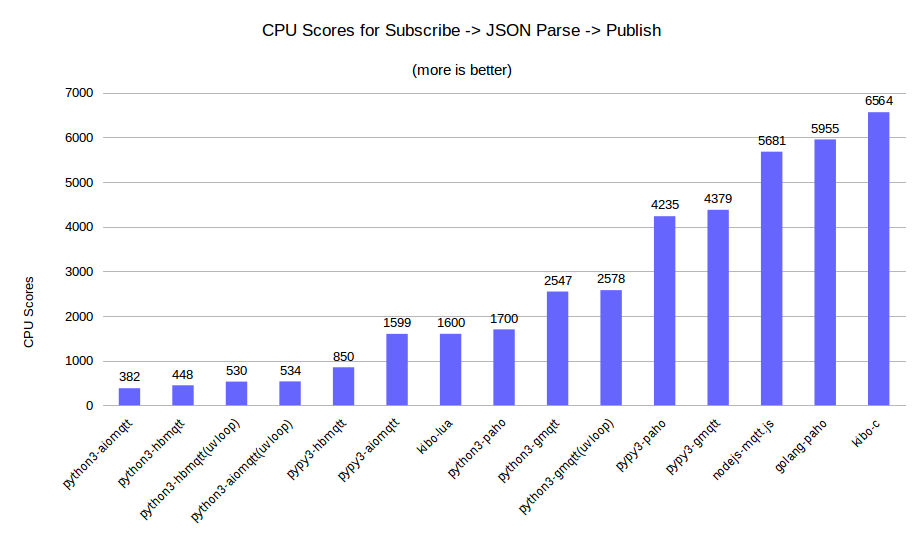

Entire workflow (Subscribe -> JSON Parse -> Publish)

| Implementation | CPU load | Msgs/sec | RAM, MB | CPU score |
| --- | --- | --- | --- | --- |
| python3-aiomqtt | 119% | 4550 | 20 | 382 |
| python3-hbmqtt | 100% | 4475 | 26 | 448 |
| python3-hbmqtt(uvloop) | 100% | 5300 | 28 | 530 |
| python3-aiomqtt(uvloop) | 119% | 6350 | 21 | 534 |
| pypy3-hbmqtt | 100% | 8500 | 132 | 850 |
| pypy3-aiomqtt | 101% | 16150 | 121 | 1599 |
| kibo-lua | 100% | 16000 | 6.5 | 1600 |
| python3-paho | 100% | 17000 | 16 | 1700 |
| python3-gmqtt | 96% | 24448 | 18 | 2547 |
| python3-gmqtt(uvloop) | 98% | 25268 | 21 | 2578 |
| pypy3-paho | 68% | 28800 | 109 | 4235 |
| pypy3-gmqtt | 58% | 25397 | 125 | 4379 |
| nodejs-mqtt.js | 47% | 26700 | 100 | 5681 |
| golang-paho | 44% | 26200 | 10.9 | 5955 |
| kibo-c | 39% | 25600 | 4.4 | 6564 |

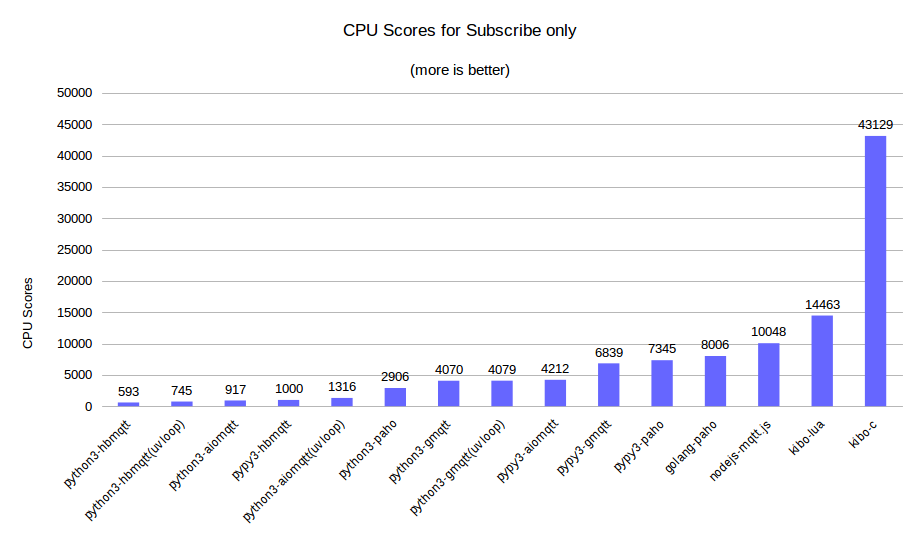

Limited workflow (Subscribe only)

| Implementation | CPU load | Msgs/sec | CPU score |
| --- | --- | --- | --- |
| python3-hbmqtt | 100% | 5 925 | 593 |
| python3-hbmqtt(uvloop) | 100% | 7 450 | 745 |
| python3-aiomqtt | 126% | 11 555 | 917 |
| pypy3-hbmqtt | 100% | 10 000 | 1000 |
| python3-aiomqtt(uvloop) | 134% | 17 635 | 1316 |
| python3-paho | 91% | 26 445 | 2906 |
| python3-gmqtt | 60% | 24 417 | 4070 |
| python3-gmqtt(uvloop) | 61% | 24 884 | 4079 |
| pypy3-aiomqtt | 57% | 24 009 | 4212 |
| pypy3-gmqtt | 37% | 25 303 | 6839 |
| pypy3-paho | 36% | 26 443 | 7345 |
| golang-paho | 30% | 24 017 | 8006 |
| nodejs-mqtt.js | 24% | 24 114 | 10048 |
| kibo-lua | 18% | 26 033 | 14463 |
| kibo-c | 5.6% | 24 152 | 43129 |

Benchmarking takeaways:

The implementation of JSON manipulation is at least as important as the implementation of the MQTT library, and sometimes it matters even more. NodeJS has one of the best JSON implementations, which is why it is quite fast.

Python implementations of the MQTT client vary hugely in performance. Here’s the list from fastest to slowest by raw throughput in the subscribe-only test on Python 3: paho, gmqtt, aiomqtt, hbmqtt.

PyPy helps a lot. It lets Python run about twice as fast.

uvloop is not as fast as it claims to be, but it does help, especially under high I/O load.

Pure C is way ahead of competitors. As expected.

The Lua scripting language is quite fast. It outperforms Python and NodeJS in the pure MQTT test.
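The PyPy takeaway can be checked against the CPU scores from the entire-workflow table. The sketch below simply divides the pypy3 score by the python3 score (default event loop) for each library; the dictionary layout and function name are ours.

```python
# (python3 score, pypy3 score) from the entire-workflow table
FULL_WORKFLOW_SCORES = {
    "aiomqtt": (382, 1599),
    "hbmqtt": (448, 850),
    "paho": (1700, 4235),
    "gmqtt": (2547, 4379),
}


def pypy_speedups(scores):
    """Return the pypy3 / python3 CPU score ratio for each library."""
    return {lib: pypy / cpython for lib, (cpython, pypy) in scores.items()}


if __name__ == "__main__":
    for lib, ratio in pypy_speedups(FULL_WORKFLOW_SCORES).items():
        print(f"{lib}: x{ratio:.1f}")
```

The ratios range from roughly 1.7 for gmqtt to above 4 for aiomqtt, so "about twice" is a fair summary for most of the libraries.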

***

While we were running this benchmark, we introduced the shared subscriptions feature in our MQTT broker, which allows balancing the load between multiple processes. It means that we no longer need to fit into 100% of a single CPU core; it is now a matter of how much money we want to spend on hardware. From a DevOps perspective, it is better to select an implementation that is easier to manage, modify, and supply with libraries that cover all possible tasks of a business logic application.
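In MQTT 5, a shared subscription is requested with a topic filter of the form `$share/<group>/<filter>`, and the broker distributes matching messages among the subscribers in the same group. The helper below builds such a filter. It is a sketch that assumes the broker follows this standard syntax; the group and topic names are hypothetical.

```python
def shared_filter(group, topic_filter):
    """Build an MQTT 5 shared-subscription filter: $share/<group>/<filter>."""
    # The share name must be non-empty and free of '/', '+', and '#'.
    if not group or any(ch in group for ch in "/+#"):
        raise ValueError("invalid share group name: %r" % (group,))
    if not topic_filter:
        raise ValueError("topic filter must not be empty")
    return "$share/{}/{}".format(group, topic_filter)


if __name__ == "__main__":
    # Every worker process subscribes with the same filter, so each message
    # is delivered to one worker in the group instead of to all of them.
    print(shared_filter("lbs-workers", "wialon/messages/#"))
```

Each worker would pass the resulting string to its client's subscribe call, so adding processes spreads the same flow across more CPU cores.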