Every day brings new technologies for software developers, but few of them address complex architectural problems. Today I will talk about one that does.

Problem: session-based application overload

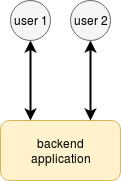

Imagine an app with backend and frontend parts. It is a long-running application: users must log in before they can use it. This means the backend creates a session for each user, receives the user's commands (usually via a REST API), and generates events for the frontend to handle.

This is a standard event-driven, session-based web application, a design very popular in fleet management and GPS tracking systems.

No matter what programming language the backend relies on and what framework the frontend uses to render the layout, the application workflow is simple:

1. Create a session for the user on the backend and transmit the session access information to the frontend.
2. Push events from various components into the session.
3. Read and process session events on the frontend.
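To make the single-server case concrete, here is a minimal in-memory sketch of these three steps, assuming one backend process keeps all sessions in its own memory. `SessionStore` and its methods are hypothetical names, and reading events is modeled as draining a queue, as an HTTP polling handler might do; a WebSocket push would follow the same pattern.

```python
import secrets
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Session:
    user_id: str
    # Events waiting to be read by the frontend, oldest first.
    events: deque = field(default_factory=deque)


class SessionStore:
    """Hypothetical in-memory session store for a single standalone backend process."""

    def __init__(self) -> None:
        self._sessions: dict[str, Session] = {}

    def create_session(self, user_id: str) -> str:
        # Step 1: create a session and hand its opaque ID to the frontend.
        session_id = secrets.token_hex(16)
        self._sessions[session_id] = Session(user_id)
        return session_id

    def push_event(self, session_id: str, event: dict) -> None:
        # Step 2: any backend component appends events to the session.
        self._sessions[session_id].events.append(event)

    def read_events(self, session_id: str) -> list[dict]:
        # Step 3: the frontend drains all pending events in one call.
        events = self._sessions[session_id].events
        drained = list(events)
        events.clear()
        return drained
```

Because the sessions live in one process's memory, a second server cannot see them, which is exactly where the difficulties below begin.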

These steps are easy to implement when a single standalone server process is your entire backend: it generates events and delivers them directly to the web application over HTTP or WebSockets.

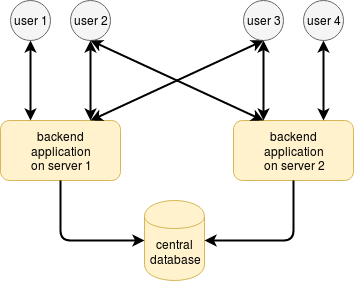

Difficulty rises once you load-balance between two or more backend instances, possibly on multiple servers. This need arises naturally once high availability is on the agenda, or when your application's resource usage exceeds the capacity of one server. In that case you need an additional centralized backend component, usually a database, that retains sessions and application state across all web servers.

Things get worse when users call the REST API without knowing which web server to connect to, especially when the web servers sit behind a load balancer or a reverse proxy such as nginx. You either need logic on the load balancer that forwards all requests from the same user or session to the same web server, or you must keep the entire session state in the database.
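The load-balancer option amounts to deterministic session affinity: hash the session ID and pick a backend from the pool. `pick_backend` below is a hypothetical helper using plain hash-modulo; in nginx the same idea is usually configured with upstream directives such as `ip_hash` or `hash`.

```python
import hashlib


def pick_backend(session_id: str, backends: list[str]) -> str:
    """Deterministically map a session to one backend (hypothetical hash-modulo affinity)."""
    if not backends:
        raise ValueError("no backends available")
    # A stable hash (unlike Python's per-process randomized hash()) gives the same
    # answer on every load-balancer instance and after restarts.
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]
```

With hash-modulo, adding or removing a server changes `len(backends)` and moves most sessions to a different server, which is one reason teams fall back to keeping all session state in the database.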

Advanced web applications usually solve this problem by introducing session clusters, each reachable via a unique URL: a so-called cloud system with regional access points.

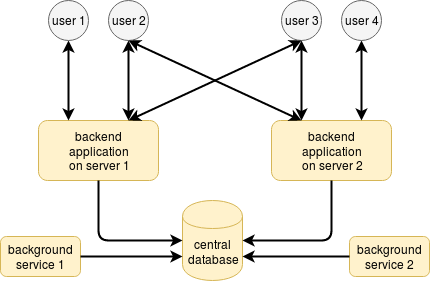

The problem worsens when you introduce separate components (microservices), each responsible for a specific part of the workload. They also need to deliver events to users, usually via the database.

I consider this a dead-end approach: at some point the system will be overloaded, and the central database will suffer first, since it carries the most load.

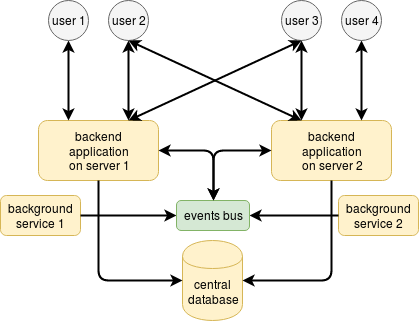

Solution: distributed event-driven system

A distributed event-driven system is built around a bus that collects events from all your backend applications and delivers events to them. Each user subscribes only to the subset of events they need.

An internal-only implementation like ours is easy: you usually end up with either Kafka or an MQTT broker, depending on which you prefer to administer. But what if you need to connect external users to the event bus? One approach is to develop an HTTP proxy adapter that checks each request against the user's ACL and filters out the events and requests that user is not entitled to.

What I don’t like about this approach is its complexity, which is massive. You come to depend on so many components that providing high availability becomes difficult. At that point most systems migrate to a cloud (AWS, Google Cloud, or MS Azure) and rely on it for administration. That is not ideal either: first, it is expensive; second, it is inflexible and may be more complicated than you expect. And once you build your architecture in the cloud, switching costs rise so high that there is no way back.

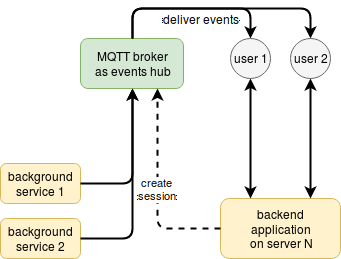

I propose a better approach to event delivery for web users: you concentrate on your business logic and rely on an MQTT broker for event delivery, one that is securely accessible both from all your services and by your users.

The concept behind the flespi MQTT broker is simple:

1. When a user logs in to the backend application, the application makes an HTTP REST call to create a dedicated access token for that user, whose ACL section allows access only to a limited set of events.
2. The user receives this token in the web browser and opens their own client connection to the MQTT broker using MQTT over WebSockets.
3. Background services or backend applications generate events and publish them to the MQTT broker.
4. The MQTT broker delivers to the user only the events permitted by the ACL in the token; all other events are filtered out.
5. When the session closes or the user's ACL changes, the backend application can update or delete the token, or the user's MQTT session, with an HTTP REST call to the MQTT broker.
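The filtering step relies on standard MQTT topic-filter semantics: `+` matches exactly one topic level, and `#` matches the parent level and everything below it. The sketch below illustrates those rules as defined in the MQTT specification; it is not flespi's implementation, and `topic_matches` and `is_allowed` are hypothetical names.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT topic-filter matching with '+' (one level) and '#' (multi-level) wildcards."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Per the MQTT spec, wildcards at the first level do not match topics starting with '$'.
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # '#' is only valid as the last level; it matches the parent and all descendants.
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


def is_allowed(acl_filters: list[str], topic: str) -> bool:
    """Deliver a message only if some filter in the token's ACL matches its topic."""
    return any(topic_matches(f, topic) for f in acl_filters)
```

With an ACL of `["data/42/#"]`, user 42 may even subscribe to `#` and still receive only topics under `data/42/`.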

Example: MQTT-based architecture

Let’s look at a more detailed example using the flespi MQTT broker and its flexible ACL feature. Say you have a service that receives telemetry data from various sensors. You need to process this data, put it into persistent storage, and deliver it to users of a mobile app or web browser.

Your users can create and register multiple sensors in your system, but it is important that each user receives the data only from sensors they created. Each user has a unique ‘user_id’, and each sensor has a unique ‘sensor_id’.

The central database has three tables: users, sensors, and sessions. The background service that receives data from the sensors connects to the MQTT broker with a special server-side token that can publish to all topics. Once connected, it transparently publishes each message received from a sensor to the corresponding MQTT topic in the format ‘data/{user_id}/{sensor_id}’.
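Because access control in this example is a prefix on the topic, each topic level must be unambiguous. Here is a minimal sketch of hypothetical helpers that build and parse ‘data/{user_id}/{sensor_id}’, assuming IDs are plain strings. They reject `/`, which would shift levels (a user_id of `42/x` would land under `data/42/#`, the ACL of user 42), as well as `+`, `#` and the null character, which MQTT does not allow in topic names.

```python
# Characters that must not appear inside a single topic level in this scheme.
FORBIDDEN = ("/", "+", "#", "\x00")


def sensor_topic(user_id: str, sensor_id: str) -> str:
    """Build the 'data/{user_id}/{sensor_id}' topic, rejecting ambiguous IDs."""
    for part in (user_id, sensor_id):
        if not part or any(ch in part for ch in FORBIDDEN):
            raise ValueError(f"invalid topic level: {part!r}")
    return f"data/{user_id}/{sensor_id}"


def parse_sensor_topic(topic: str) -> tuple[str, str]:
    """Split a sensor topic back into (user_id, sensor_id)."""
    levels = topic.split("/")
    if len(levels) != 3 or levels[0] != "data" or not all(levels[1:]):
        raise ValueError(f"not a sensor topic: {topic!r}")
    return levels[1], levels[2]
```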

The service responsible for writing sensor data to persistent storage subscribes to the topic ‘data/#’ with the ‘clean-session=false’ flag, receives all messages from all sensors, and stores them. When the storage service disconnects or reboots, the MQTT broker accumulates messages for it and delivers them as soon as it reconnects.
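The buffering behavior of ‘clean-session=false’ can be modeled with a toy class for a single subscription, such as the storage service's subscription to ‘data/#’. `PersistentSession` is a hypothetical name and this is not broker code. Note that the MQTT 3.1.1 specification only requires a broker to keep QoS 1 and QoS 2 messages for a disconnected persistent session (queuing QoS 0 is optional), so the storage service should subscribe with QoS 1 or higher; real brokers typically also limit how much they queue.

```python
from collections import deque


class PersistentSession:
    """Toy model of one clean_session=False subscription (illustration only)."""

    def __init__(self) -> None:
        self.connected = False
        self._queue: deque = deque()  # messages the broker keeps while the client is offline
        self.received: list = []      # messages the client has actually received

    def connect(self) -> None:
        self.connected = True
        # On reconnect, everything queued while the client was away is delivered in order.
        while self._queue:
            self.received.append(self._queue.popleft())

    def disconnect(self) -> None:
        self.connected = False

    def on_publish(self, topic: str, payload: bytes) -> None:
        message = (topic, payload)
        if self.connected:
            self.received.append(message)
        else:
            self._queue.append(message)
```

With a clean session, the broker would instead discard the session, and the messages published during the outage would be lost to this subscriber.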

Your backend application uses a special token with an extra ‘supertoken’ flag, which lets it create other tokens and manage their ACLs. When a user logs in, the backend creates a session for them in its local database and makes a REST call to the flespi platform to create a token that grants subscription access to ‘data/{user_id}/#’. The user receives this token in the web browser as the response to the login request.

In the browser, the user's JavaScript code, using mqtt.js or a similar library, opens a WebSocket connection to the MQTT broker with this token and subscribes either to all events (‘#’) or only to specific sensors (‘data/+/{sensor_id}’). Even with ‘#’, the token's ACL ensures that only that user's data is delivered.
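The backend side of this login flow might look like the sketch below, under stated assumptions: a plain dict stands in for the local session table, and the actual HTTP call to the flespi platform is omitted. The body fields (`info`, `ttl`, `acl`, `topic`, `actions`) are hypothetical placeholders, not flespi's actual token schema, which should be taken from the flespi REST API documentation.

```python
import json
import secrets
import time


def build_token_request(user_id: str, ttl_seconds: int) -> dict:
    """Hypothetical body for the 'create token' REST call made with the supertoken."""
    return {
        "info": f"web session for user {user_id}",  # hypothetical field
        "ttl": ttl_seconds,                          # hypothetical field: idle lifetime
        "acl": [                                     # hypothetical ACL shape
            {"topic": f"data/{user_id}/#", "actions": ["subscribe"]},
        ],
    }


def login(user_id: str, sessions: dict) -> tuple[str, str]:
    """Create a local session and the JSON body for the token request (HTTP call omitted)."""
    session_id = secrets.token_hex(16)
    sessions[session_id] = {"user_id": user_id, "created_at": time.time()}
    body = json.dumps(build_token_request(user_id, ttl_seconds=3600))
    return session_id, body


def browser_subscriptions(sensor_ids: list[str]) -> list[str]:
    """Topic filters the browser client would subscribe to after connecting."""
    if not sensor_ids:
        return ["#"]  # all events; the token's ACL still limits delivery to data/{user_id}/#
    return [f"data/+/{sensor_id}" for sensor_id in sensor_ids]
```

The broker applies the token's ACL on top of whatever filters the client subscribes to, so the client-side subscription choice only narrows what the user receives.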

As soon as your background service publishes new messages from those sensors to the MQTT broker, they appear in the app of every user who has access to these sensors, usually reaching your JavaScript code in less than a second.

When the backend application closes a session, it can delete the token, or the MQTT sessions opened by the user, with a REST call. Alternatively, a ‘ttl’ value can be set so that the token is deleted automatically once it has gone unused for the specified time.
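The ‘ttl’ behavior described above (delete a token once it has gone unused for a given time) can be modeled as an idle timeout that every use refreshes. `TokenRegistry` is a hypothetical in-memory model of that description, not flespi's implementation; the injectable clock makes it easy to test.

```python
import time


class TokenRegistry:
    """Toy model of idle-TTL expiry: a token disappears once unused for `ttl` seconds."""

    def __init__(self, clock=time.monotonic) -> None:
        self._clock = clock
        self._tokens: dict[str, tuple[float, float]] = {}  # token -> (ttl, last_used)

    def add(self, token: str, ttl: float) -> None:
        self._tokens[token] = (ttl, self._clock())

    def delete(self, token: str) -> None:
        # Explicit revocation, e.g. when the backend closes the user's session.
        self._tokens.pop(token, None)

    def use(self, token: str) -> bool:
        """Return True if the token is still valid, refreshing its idle timer."""
        entry = self._tokens.get(token)
        if entry is None:
            return False
        ttl, last_used = entry
        now = self._clock()
        if now - last_used > ttl:
            del self._tokens[token]  # expired while idle
            return False
        self._tokens[token] = (ttl, now)
        return True
```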

Such a system is compact and straightforward to implement. You can place the components of your application in different datacenters and secure them with SSL and the token-based access control of the flespi MQTT broker.

With MQTT persistent (non-clean) sessions, events are stored reliably for users even while they are disconnected, which suits chat applications, alarm processing, and similar cases. The approach is flexible and simple, works out of the box with minimal effort, is free for most use cases, and is one of the most advanced technologies available today, so it is worth considering when you engineer your next system.