In December 2025 our monthly uptime was 100% – great stability with zero incidents reported. However, if we look at the combined annual uptime figure – 99.9612% – this turns out to be the worst year in flespi history.

The reasons are clearly visible on the monthly uptime bars – in April 2025, we experienced a blackout in one of our data centers, and in July 2025, we lost power on a critical network segment.
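
To give the annual figure a sense of scale, here is a minimal sketch that converts an uptime percentage into hours of downtime. It assumes the figure is measured over the full 8760 hours of 2025; how the uptime is actually calculated is not described here, so treat the result as a rough estimate.

```python
# Convert an uptime percentage into the downtime it implies.
# Assumption: the annual figure covers all 8760 hours of 2025 (a non-leap year).

HOURS_IN_2025 = 365 * 24


def downtime_hours(uptime_percent: float, period_hours: float = HOURS_IN_2025) -> float:
    """Return the hours of downtime implied by an uptime percentage."""
    return period_hours * (100.0 - uptime_percent) / 100.0


annual_downtime = downtime_hours(99.9612)
print(f"{annual_downtime:.2f} hours (~{annual_downtime * 60:.0f} minutes)")
```

Under that assumption, 99.9612% corresponds to roughly 3.4 hours of total downtime across the year.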

The first data center incident was described and analyzed in detail in a dedicated article. What I did not expect is that this article would become our second most liked post of the year, gathering an order of magnitude more likes than almost any other blog post. Only one did better – the gen4 codi architecture overview.

Interestingly, both of these highly liked articles, despite covering very different topics, have something in common – a deep technical dive with detailed insights shared openly. So if you missed them, take a look: it might be an exciting way to peek inside flespi.

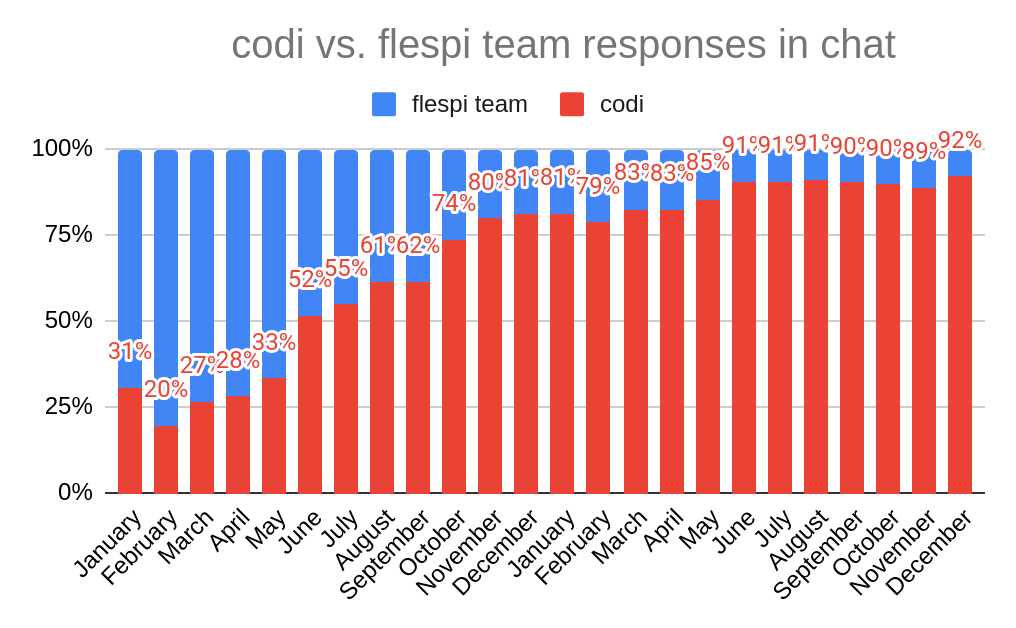

To be honest, I should also mention the third most liked article – it was an ordinary February 2025 changelog. I’m not entirely sure what exactly drove the engagement there – the note about the U.S. losing its position as a reliable ally or some technical details around codi. But both topics have evolved significantly since February and have now stabilized at some level. I won’t touch on the U.S. topic today, but as for codi – it has grown and settled at handling around 90% of our support communication:

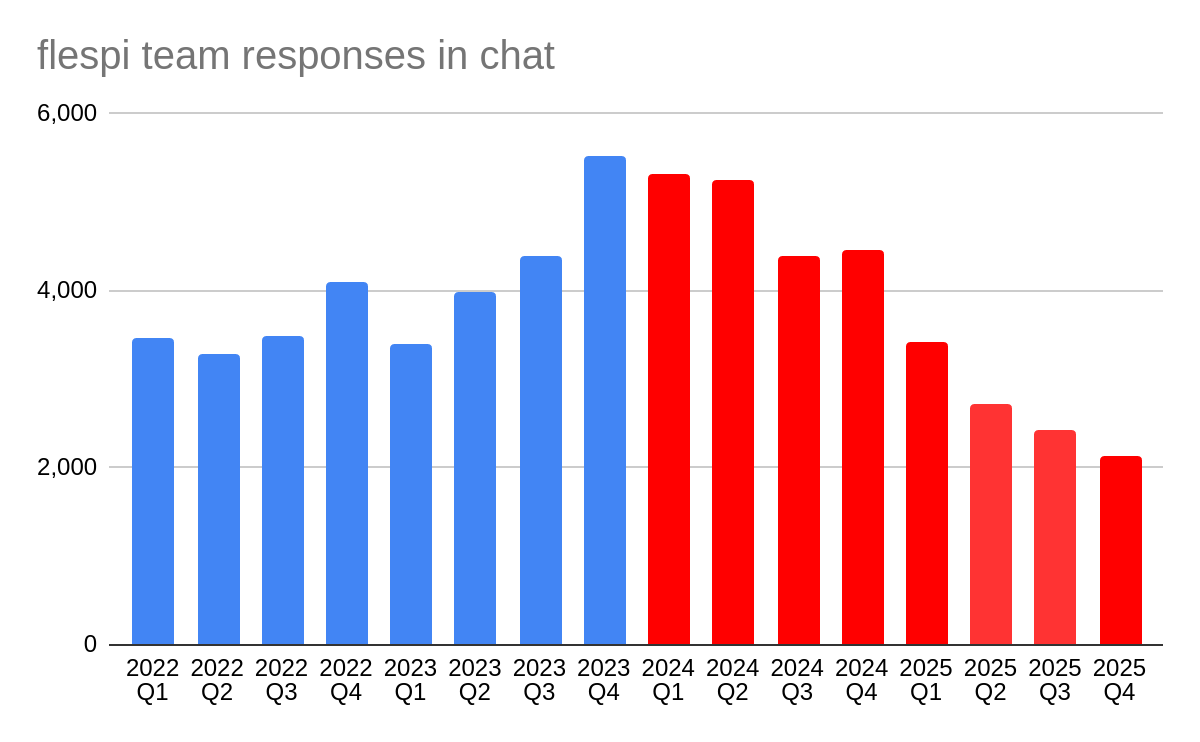

However, the most important point is not the sheer number of AI responses, but the fact that, despite a rapidly growing user base and increasing complexity of the telematics projects we’re involved in, the actual load on the human part of the team has significantly decreased. On the chart below, you can see how the introduction of AI affected the number of messages sent by human engineers:

The number of messages sent by human engineers is now 2.5x lower than at the end of 2023. And given the expected trend, without codi, this number could have grown 4x–5x compared to today. So AI is clearly working for us in support, and now we’re extending this approach to the engineering part of our work. We also plan to publish a series of articles on this topic, with the first one already scheduled for January 2026.

As part of our year-end results, we reached 1.5 million registered devices and now have 590 companies using flespi in their solutions. On September 16, 2024, we entered the one-million-devices club and are now moving towards the next major milestone – 10 million devices. The first 500K device registrations were reached in September 2022. It took us 24 months to connect the next 500K devices and reach the first million, and then 15 months to connect another 500K. My estimate is that we can reach 2 million devices within the next 12 months – roughly by the very end of 2026, assuming everything goes well.
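
The milestones above imply an average registration rate for each 500K step, and a short sketch makes the forecast easier to judge. The interval labels and the simple devices-per-month average are my own framing; the last interval is the forecast, not a measured result.

```python
# Average monthly registration rate for each 500K step mentioned above.
# The 12-month interval is the forecast, not a measured result.

STEP = 500_000  # devices added in each interval

intervals_months = {
    "500K -> 1M (Sep 2022 - Sep 2024)": 24,
    "1M -> 1.5M (Sep 2024 - Dec 2025)": 15,
    "1.5M -> 2M (forecast)": 12,
}


def monthly_rate(devices: int, months: int) -> float:
    """Average number of devices registered per month over an interval."""
    return devices / months


rates = {label: monthly_rate(STEP, months) for label, months in intervals_months.items()}
for label, rate in rates.items():
    print(f"{label}: ~{rate:,.0f} devices/month")
```

The average rate grew about 1.6x between the first two intervals (from roughly 20.8K to 33.3K devices per month). Reaching 2 million in 12 months needs a further 1.25x increase, to roughly 41.7K devices per month.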

Regarding December, we were mostly focused on internal work – improving various platform components and preparing for higher throughput as the load grows.

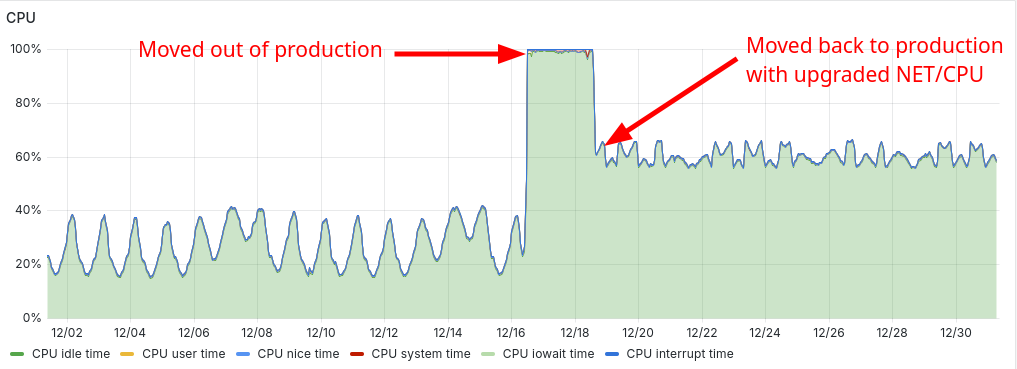

It took quite some time to order, set up, and install everything, but we were finally able to address the second incident of this year and eliminate the critical network segment by upgrading uplinks with bonded interfaces. With this change, a similar failure should now be a non-event for us. This was achieved through CPU and networking hardware upgrades on our data center routers, which also resulted in a noticeable reduction in packet routing load:

We are also in the process of installing a dedicated set of routers to handle inter-data-center traffic independently, which will give us additional capacity and further reduce the load.

We published the roadmap for the upcoming year and shared our outlook on the telematics market. AI is getting a lot of attention worldwide, and looking at our results, it really deserves this attention right now. However, at some point, when everyone squeezes everything possible out of AI, the focus will likely return to more fundamental topics. Or at least I hope so. Maybe the world is changing fast, but people are not changing that quickly.

Internally, we also have ongoing debates between “AI is evil and fake” and “Everything in the future will be made by AI”. Given that our team mostly consists of deeply involved lead-level engineers with a lot of legacy knowledge, you can imagine how intense these discussions can get. As usual, the truth is somewhere in the middle.

Among other things currently in development is an enhancement of device commands with priority, condition, and max_attempts options. This will significantly simplify implementation for many scenarios that currently require more advanced application-level logic. For example, to safely block an engine, it will be possible to set a position.speed==0 condition, and the command will be sent to the device only when its speed is zero. Similar to plugins and streams, conditions will also allow checking geofences where the device is located and its metadata. We plan to release this functionality in early January.
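
To illustrate how these three options could interact, here is a small local simulation. It is only a sketch of the idea, not the flespi implementation or API: the `Command` class, the `next_command` helper, the "higher priority goes first" ordering, and the way attempts are counted are all assumptions made for the example, and the condition is modeled as a plain Python function rather than a flespi expression.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Telemetry = dict  # latest device parameters, e.g. {"position.speed": 0}


@dataclass
class Command:
    """Hypothetical model of a queued device command (not a flespi type)."""
    name: str
    priority: int = 0  # assumption: higher value is tried first
    condition: Optional[Callable[[Telemetry], bool]] = None
    max_attempts: int = 1
    attempts: int = 0


def next_command(queue: list[Command], telemetry: Telemetry) -> Optional[Command]:
    """Pick the highest-priority command whose condition holds and that has attempts left."""
    ready = [
        c for c in queue
        if c.attempts < c.max_attempts
        and (c.condition is None or c.condition(telemetry))
    ]
    if not ready:
        return None
    chosen = max(ready, key=lambda c: c.priority)
    chosen.attempts += 1  # count this send attempt
    return chosen


# Engine block that may only be sent when the vehicle is standing still,
# mirroring the position.speed==0 example above.
block_engine = Command(
    name="block_engine",
    priority=10,
    condition=lambda t: t.get("position.speed") == 0,
    max_attempts=3,
)
queue = [block_engine]

moving = next_command(queue, {"position.speed": 42})  # condition fails, nothing is sent
stopped = next_command(queue, {"position.speed": 0})  # condition holds, command is sent
```

In this model the command simply waits in the queue while the vehicle is moving, which is the kind of logic that otherwise has to live in the application.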

As a simpler alternative to WebRTC for real-time video streaming, we are now also exploring HTTP-FLV. Although FLV is relatively old and no longer very popular, it lets us deliver video streams to users with a latency of less than one second. Our infrastructure and software components are already fully prepared for HTTP-FLV, so we have a good chance of releasing this feature this winter.

We also have an actively developed plugin for extracting structured information from .ddd tachograph files directly inside flespi. In addition, we equipped codi with deep knowledge of tachograph regulations and data structures based on official CELEX documents. This is done both to simplify our own development and to provide users with a powerful tachograph consulting tool that combines CELEX documentation, telematics devices, real data from connected tachographs, and, of course, a large amount of accumulated user experience. This makes codi a truly unique tool whose tacho expertise goes far beyond that of any individual human specialist. You are welcome to ask even the most complex tachograph-related questions now.

And as I already mentioned, we’ve done a lot of internal refactoring work to adapt the platform for AI-driven engineering. As a result, some protocol-related engineering tasks are now processed through a dedicated AI tool:

Protocol engineering with the AI tool follows one of two very different timelines. If everything goes smoothly – the protocol knowledge is already imported into the AI knowledge base, and the AI engineer performs its task correctly (which currently happens in about 85% of cases) – the work is usually completed in less than an hour, often within minutes. At this stage, most of the latency comes from human engineers validating the implementation, deploying it, and communicating the results.

If issues come up during implementation, we run the tool over and over, improving its operation while the environment remains unchanged (e.g., the fix is not yet committed). In such cases, protocol work may take several days or even up to a week. However, by the end of this process, the AI protocol engineering tool becomes better adapted for similar tasks in the future.

To make AI-based engineering truly effective, we need to adapt our platform, internal tools, and processes. Given the amount of legacy accumulated over our eight years of history, this involves many small but very focused changes. We started with protocol engineering to ensure high-quality device protocol integrations. I also expect that device manufacturers will start releasing new device versions at a much faster pace, partly driven by AI. Next, we plan to introduce AI into plugins and streams engineering to speed up integrations between flespi and other systems.

This increased engineering pace also requires much faster documentation, which naturally drives AI adoption across our website and platform documentation. With each iteration, we move faster and faster, which in turn pushes us to further improve the capacity and stability of our infrastructure. It may look like an endless loop, but I believe we can keep it under control.

The year 2026 promises to be tough. Hopefully, we – not only flespi, but humanity as a whole – will manage to stop at least a few wars and avoid starting new ones. Have a great year and stay tuned!