Until now, AWS IoT Hub has been the native way to send flespi device messages to AWS. This article shows an alternative approach with higher throughput.

Disclaimer: this article was written with the help of ChatGPT.

Current approach limitations

If you type “flespi to AWS” into any search engine, the first link you see will be this article. For years, we’ve been recommending that our users rely on AWS IoT Hub to deliver parsed data from GPS trackers into AWS. But at some point (as our users connected more and more devices to the AWS IoT stream), we hit a throughput limit of 6000 messages per minute, and this limit can’t be raised.

Explanation of the AWS limitation combined with flespi stream architecture

After a thorough investigation, we found that this limitation comes from the combination of the AWS quota “Maximum inbound unacknowledged QoS 1 publish requests” specified here and the architecture of flespi streams. A flespi stream must be sure that every message is delivered, so it keeps its own buffer of undelivered messages, and messages are removed from that buffer only after the destination acknowledges the batch they were sent in. For an HTTP stream, for example, the acknowledgment is response code 200; for Wialon-retranslator, it is a special confirmation packet with the number of received messages. The AWS IoT stream works over MQTT, so the next batch of messages is sent only once all previously published messages have received a PUBACK, and this quota caps the number of such unacknowledged messages at 100.
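The resulting ceiling is simple arithmetic: if at most 100 messages can be in flight and the stream waits for every PUBACK before sending more, throughput is the window size times the number of publish/acknowledge cycles per minute. The sketch below assumes a cycle of roughly one second; that figure is inferred from the observed 6000 messages per minute, not measured, and the function name is hypothetical.

```python
def max_messages_per_minute(inflight_limit: int, cycle_seconds: float) -> float:
    """Upper bound for a sender that waits until every in-flight QoS 1
    publish is acknowledged before it sends the next batch."""
    if inflight_limit <= 0 or cycle_seconds <= 0:
        raise ValueError("inflight_limit and cycle_seconds must be positive")
    cycles_per_minute = 60.0 / cycle_seconds
    return inflight_limit * cycles_per_minute


# 100 unacknowledged publishes allowed; ~1 s per cycle is an assumption
# chosen to match the observed 6000 messages/minute.
print(max_messages_per_minute(100, 1.0))  # 6000.0
```

Because the window is fixed, the only lever left is the cycle time: a slower round trip lowers the ceiling, and connecting more devices to the same stream does not raise it.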

Is there a native way to inject flespi messages into the AWS SQS queue?

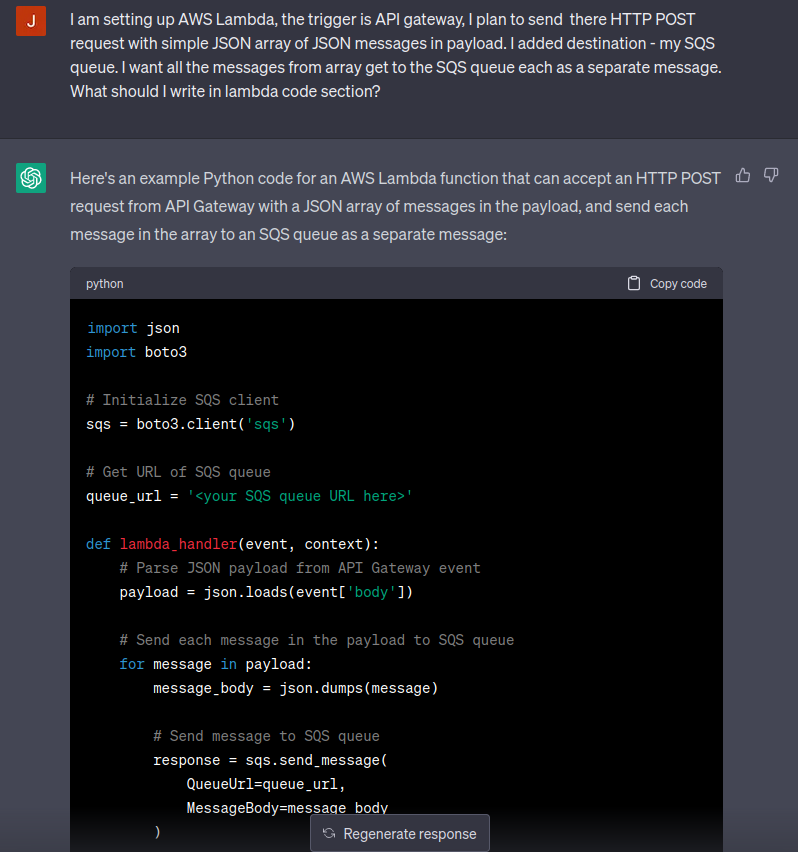

We got this question from one of our customers. My first instinct was to answer something like “Yes, insert the data into AWS IoT Hub and set up a trigger to send messages to SQS”. But I decided to investigate another option: doing it with an HTTP stream. So I created an SQS queue (a simple procedure that takes a few mouse clicks). Then I created an AWS Lambda function with Python code and the SQS queue as its destination (this grants the code permission to send data to SQS). Then I asked ChatGPT:

The code looked valid, so I inserted it into Lambda’s code editor and deployed the function.
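For readers who want to reproduce the setup, here is a minimal sketch of such a handler; it is not the code ChatGPT generated. It assumes the function is called through a Lambda function URL, that the flespi HTTP stream posts a JSON array of messages in the request body, and that the queue URL comes from a hypothetical `QUEUE_URL` environment variable. Messages are sent one at a time with `send_message`.

```python
import base64
import json
import os

_sqs_client = None  # created on first use, then reused by warm invocations


def get_sqs_client():
    global _sqs_client
    if _sqs_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime

        _sqs_client = boto3.client("sqs")
    return _sqs_client


def parse_flespi_body(event: dict) -> list:
    """Extract the list of flespi messages from a function URL event."""
    body = event.get("body") or "[]"
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    payload = json.loads(body)
    # Accept a single JSON object as well as an array of messages.
    return payload if isinstance(payload, list) else [payload]


def forward_one_by_one(sqs, queue_url: str, messages: list) -> int:
    """Send each message to SQS with a separate SendMessage call."""
    for message in messages:
        sqs.send_message(QueueUrl=queue_url, MessageBody=json.dumps(message))
    return len(messages)


def lambda_handler(event, context):
    queue_url = os.environ["QUEUE_URL"]  # hypothetical environment variable
    sent = forward_one_by_one(get_sqs_client(), queue_url, parse_flespi_body(event))
    # Returning 200 tells the flespi HTTP stream the batch was delivered.
    return {"statusCode": 200, "body": json.dumps({"sent": sent})}
```

If `send_message` raises, the invocation fails instead of returning 200, so the stream keeps the batch in its buffer and retries it.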

Debugging the code and my thoughts about ChatGPT

The next step was to configure a test, so I inserted a sample message from flespi and ran the test… which FAILED! “Yeah, the machine still can’t beat the human 😏,” I thought dismissively, found the cause of the problem, and smugly changed a line of code. I was so happy that I, as a developer, was still valuable, that this complex AI still couldn’t write a simple 20-line piece of working code, and that my years of studying mathematics and computer science had not been a waste of time.
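One thing worth getting right when configuring such a test is the event shape. A handler behind a Lambda function URL receives the HTTP body as a JSON *string* in `event["body"]`, not as a nested object. The sketch below assumes payload format 2.0 and fills in only the fields a handler like this typically reads; the helper name is hypothetical and the sample message values are illustrative.

```python
import json


def make_function_url_test_event(messages: list) -> dict:
    """Wrap flespi messages in the shape a Lambda function URL delivers.

    The body is serialized to a string because that is how the HTTP
    request body reaches the handler.
    """
    return {
        "version": "2.0",
        "requestContext": {"http": {"method": "POST"}},
        "headers": {"content-type": "application/json"},
        "body": json.dumps(messages),
        "isBase64Encoded": False,
    }


# Illustrative flespi-style message; the values are made up.
sample = {
    "ident": "123456789012345",
    "timestamp": 1700000000.0,
    "position.latitude": 52.52,
    "position.longitude": 13.405,
}
print(json.dumps(make_function_url_test_event([sample]), indent=2))
```

The printed JSON can be pasted into the Lambda console as a test event.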

Next, I created an HTTP stream with the Lambda’s URL as the target, assigned a device to it, and POSTed several thousand messages to the device to simulate high load… and the stream FAILED! I started investigating and figured out that I had written the test incorrectly: it was my mistake, and ChatGPT had written correct, working code from the start. It was an overwhelming mix of feelings 😭: this transformer that predicts the next word did the job better than me, without mistakes. And I, so experienced and educated, did what all humans do: made mistakes.

Tests and optimization

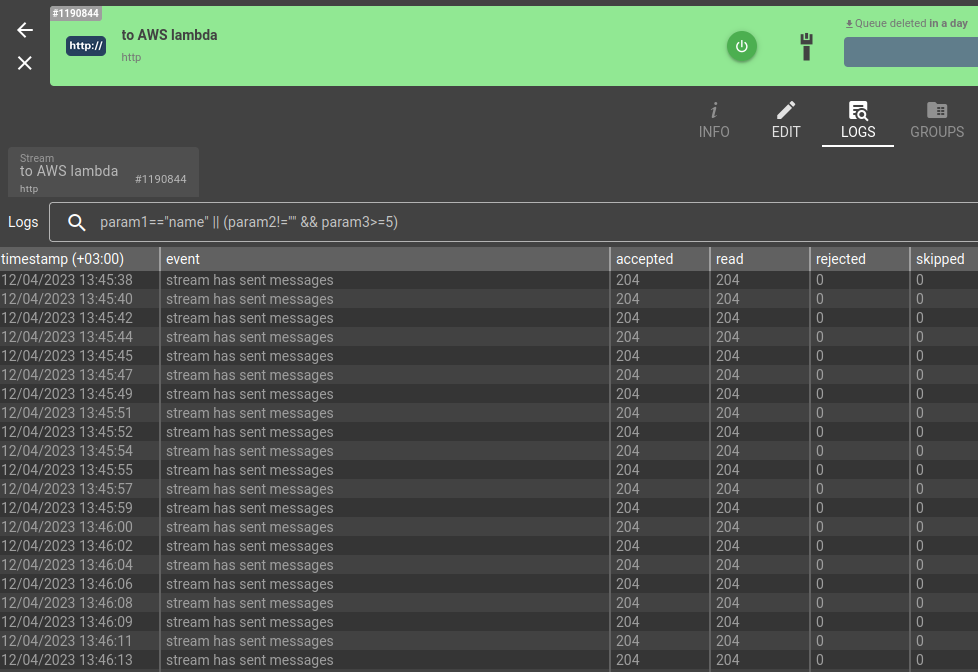

After I verified that the data flow worked (of course, I asked ChatGPT for code that consumes messages from the SQS queue and prints them to the terminal, closing the loop on the data flow), it was time to run benchmarks. Here is what I saw after enabling the stream:
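A consumer like the one described can be sketched with boto3’s `receive_message` and `delete_message`. This is not the code ChatGPT produced; it assumes AWS credentials are configured locally and that the queue URL comes from a hypothetical `QUEUE_URL` environment variable. The function names are hypothetical as well.

```python
import json
import os


def drain_once(sqs, queue_url: str, wait_seconds: int = 20) -> list:
    """Long-poll SQS once, print each message body, then delete it."""
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
        WaitTimeSeconds=wait_seconds,  # long polling; 20 s is the maximum
    )
    bodies = []
    for msg in response.get("Messages", []):
        body = json.loads(msg["Body"])
        print(body)
        bodies.append(body)
        # Delete only after processing, so a crash leaves the message in
        # the queue to be received again after the visibility timeout.
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=msg["ReceiptHandle"])
    return bodies


def consume_forever() -> None:
    """Print messages from the queue until interrupted with Ctrl+C."""
    import boto3

    sqs = boto3.client("sqs")
    queue_url = os.environ["QUEUE_URL"]  # hypothetical environment variable
    while True:
        drain_once(sqs, queue_url)
```

Long polling keeps the loop from spinning on empty responses while the queue is idle.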

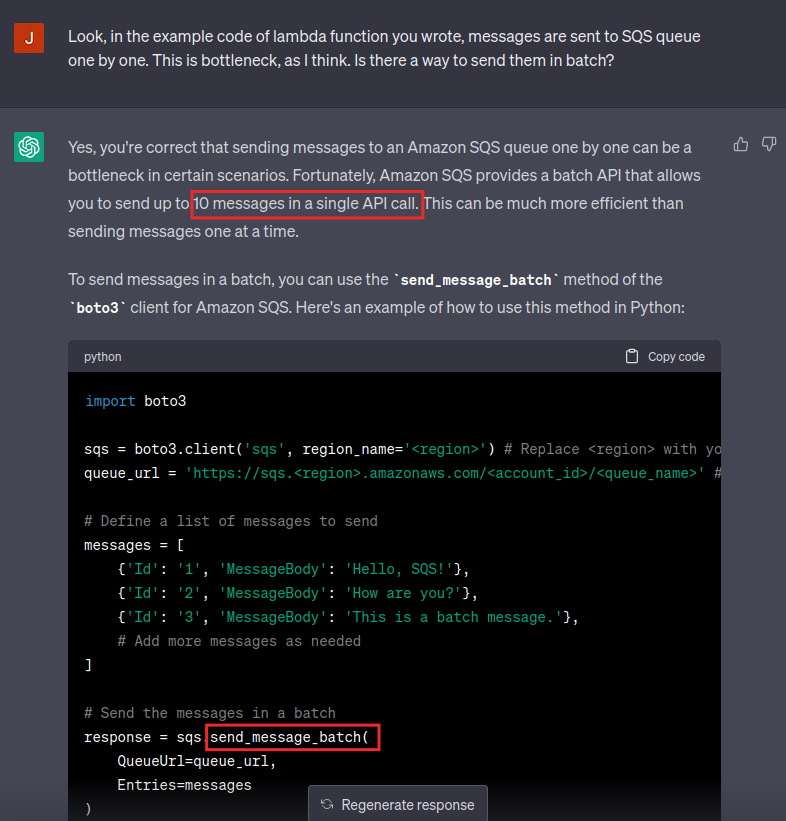

200 messages per 2 seconds (6000 messages per minute) is exactly the speed of the aws-iot stream. There had to be a way to optimize it. I looked through the code once again and noticed that messages were sent to SQS one by one via the sqs.send_message function. I asked ChatGPT:

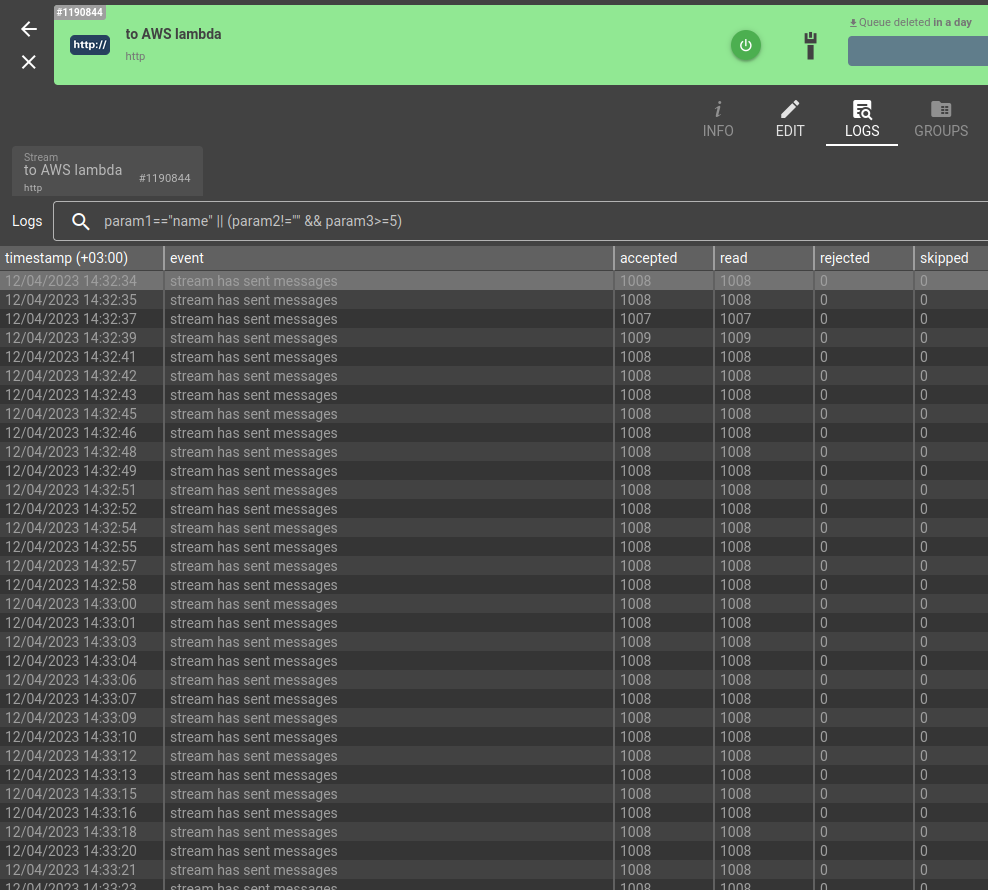

This was exactly what I needed. So I asked ChatGPT to modify the code so that it accepts more than 10 messages in the event JSON, splits them, and sends them in batches of up to 10 (the maximum for a single SQS SendMessageBatch call). ChatGPT did this perfectly, without mistakes. Then I benchmarked the solution:
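The batching change can be sketched as follows. The function names are hypothetical and the code takes any boto3 SQS client; it splits the incoming list into chunks of 10 and sends each chunk with `send_message_batch`, treating any rejected entry as an error.

```python
import json
from typing import Iterator

SQS_MAX_BATCH = 10  # SendMessageBatch accepts at most 10 entries


def chunks(items: list, size: int = SQS_MAX_BATCH) -> Iterator[list]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def forward_in_batches(sqs, queue_url: str, messages: list) -> int:
    """Send messages with SendMessageBatch, up to 10 per call.

    Raises RuntimeError if SQS rejects any entry, so the Lambda returns an
    error instead of 200 and the flespi stream keeps the data for a retry.
    """
    sent = 0
    for batch in chunks(messages):
        entries = [
            # Ids only need to be unique within a single batch request.
            {"Id": str(i), "MessageBody": json.dumps(m)}
            for i, m in enumerate(batch)
        ]
        response = sqs.send_message_batch(QueueUrl=queue_url, Entries=entries)
        failed = response.get("Failed", [])
        if failed:
            raise RuntimeError(f"SQS rejected {len(failed)} entries: {failed}")
        sent += len(response.get("Successful", []))
    return sent
```

A handler would call `forward_in_batches` with the parsed message list and return 200 only after it completes. Since a retry resends the whole flespi batch, chunks that already succeeded before a failure end up in the queue twice, so consumers should tolerate duplicates (at-least-once delivery).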

~42K messages per minute! That is 7 times faster than the aws-iot stream, and a result I liked.

The final version of the Python code for your reference is published here.

***

I intentionally used the simplest possible AWS configuration here: the data is posted directly to the AWS Lambda function’s URL, without AWS API Gateway (which can be added as a trigger for the Lambda function). In practice, it’s a good idea to use it, because with API Gateway you can configure authorization (via a static HTTP header) and allowlist the flespi IP range so that only requests from the flespi platform are accepted.
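API Gateway is the recommended place for these checks. As a lighter stopgap that does not replace it, the same two checks can also be sketched inside the Lambda handler itself. This assumes the flespi HTTP stream is set up to send a static header; the header name, the secret, and the allowed network are hypothetical placeholders (`192.0.2.0/24` is a documentation range, not a flespi range). The source IP is read from `requestContext.http.sourceIp` in the function URL event.

```python
import hmac
import ipaddress

# Hypothetical values: replace with your own secret and the real flespi ranges.
EXPECTED_TOKEN = "change-me"
TOKEN_HEADER = "x-flespi-token"  # hypothetical header name
ALLOWED_NETWORKS = [ipaddress.ip_network("192.0.2.0/24")]  # placeholder range


def is_authorized(event: dict) -> bool:
    """Check a static shared-secret header and the caller's source IP."""
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    token = headers.get(TOKEN_HEADER, "")
    # compare_digest avoids leaking the secret through timing differences.
    if not hmac.compare_digest(token.encode(), EXPECTED_TOKEN.encode()):
        return False
    source_ip = event.get("requestContext", {}).get("http", {}).get("sourceIp")
    if not source_ip:
        return False
    try:
        addr = ipaddress.ip_address(source_ip)
    except ValueError:
        return False
    # An IPv6 address is simply "not in" an IPv4 network, no exception.
    return any(addr in net for net in ALLOWED_NETWORKS)
```

A handler would return `{"statusCode": 403}` when `is_authorized(event)` is false, before touching SQS.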

I think I'll go and ask ChatGPT how to do it 😄