For us, living in a northern country, June, July, and August are mostly off-season months filled with vacations for our team members. Despite this, we've accomplished quite a lot of interesting things that you can now try.

But as usual, let me start with our monthly uptime. In June, flespi achieved a 99.9838% uptime rate, with several outages caused by network connectivity issues on the side of external parties. At the beginning of the month, there was a fairly global issue in the Telia network that affected our connections from AWS. And in the middle of the month, there were two network cuts lasting 194 and 208 seconds respectively. These cuts occurred during router maintenance in the Arelion (Telia) network. During the maintenance, BGP connections were not closed gracefully, so the other peering parties detected the issue only on timeout (180 seconds in our case) and then had to recalculate and announce new BGP routing paths to restore stable traffic flow. Unfortunately, this was something we could not directly influence, because the broken path was the one leading towards us (incoming routing). Nevertheless, we have defined a set of actions to improve the situation, and perhaps they will work better next time.
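To put these numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the uptime rate is measured over the full 30 days of June, which is our guess at the method and not a description of how the monitoring actually works. All variable names are illustrative.

```python
from datetime import timedelta

# Assumption: uptime is measured over the whole calendar month (June has 30 days).
JUNE_SECONDS = int(timedelta(days=30).total_seconds())  # 2,592,000 seconds

UPTIME_PERCENT = 99.9838        # figure quoted above
BGP_TIMEOUT_S = 180             # BGP timeout quoted above
MID_MONTH_CUTS_S = [194, 208]   # the two mid-month network cuts, in seconds


def downtime_seconds(uptime_percent: float, period_seconds: int) -> float:
    """Convert an uptime percentage into seconds of downtime over a period."""
    return period_seconds * (100.0 - uptime_percent) / 100.0


total_downtime = downtime_seconds(UPTIME_PERCENT, JUNE_SECONDS)
cuts_total = sum(MID_MONTH_CUTS_S)

# Downtime not explained by the two mid-month cuts.
remainder = total_downtime - cuts_total

# How long each cut lasted beyond the BGP timeout itself.
overshoot = [cut - BGP_TIMEOUT_S for cut in MID_MONTH_CUTS_S]

print(f"Total downtime in June: {total_downtime:.0f} s")
print(f"Two mid-month cuts:     {cuts_total} s")
print(f"Remaining downtime:     {remainder:.0f} s")
print(f"Time beyond BGP timeout per cut: {overshoot} s")
```

Under this assumption, the two cuts account for most of the month's downtime, and in each case the bulk of the outage was simply the wait for the BGP timeout to expire.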

In June, our team focused on several major tracks outlined in our 2024 roadmap.

We devoted considerable time to enhancing our video integration with major supported manufacturers. In some cases, it appeared that the device manufacturers were improving their firmware in tandem with us. Although video functionality was released and has been marketed for years, it remains a work in progress on many devices. This presents a promising opportunity for those integrating video into their platforms. The market is still young, wild, and growing steadily, offering a prime chance to capture your share of it.

We launched AI consulting services for devices from major manufacturers, based on their documentation. We're expanding this service to cover more and more companies and specific models. Essentially, you can now query the AI instead of contacting device manufacturer support and receive instant answers to your questions. Of course, the quality of the answers depends on the documentation in our knowledge base. Nonetheless, it remains a valuable resource, and we invite our users to use this AI-backed manual consultation service in their daily support team operations.

In our internal support process, codi, our AI-based assistant, took the lead in June. We invested a lot of time in adjusting its knowledge, tools, LLM selection, and instruction set. Around mid-June, we noticed a significant shift in user behavior, with the majority preferring to interact with codi rather than with human supporters. Once we identified this fundamental change, we gave codi the green light and enabled its automatic activation, so that it is the first to respond in chat.

It was a tough week as we worked to improve and cover all our processes, but our efforts paid off. Currently, the human role in flespi support resembles the pilot's function in modern airliners: supervising the autopilot (codi), making adjustments, correcting its path, monitoring answer quality, and assisting in complex cases. The heavy lifting in support is now handled by codi. Moreover, it's even creating tasks for humans now. If we had known earlier that we were building an AI that creates tasks for humans, would we have built it at all? 😄

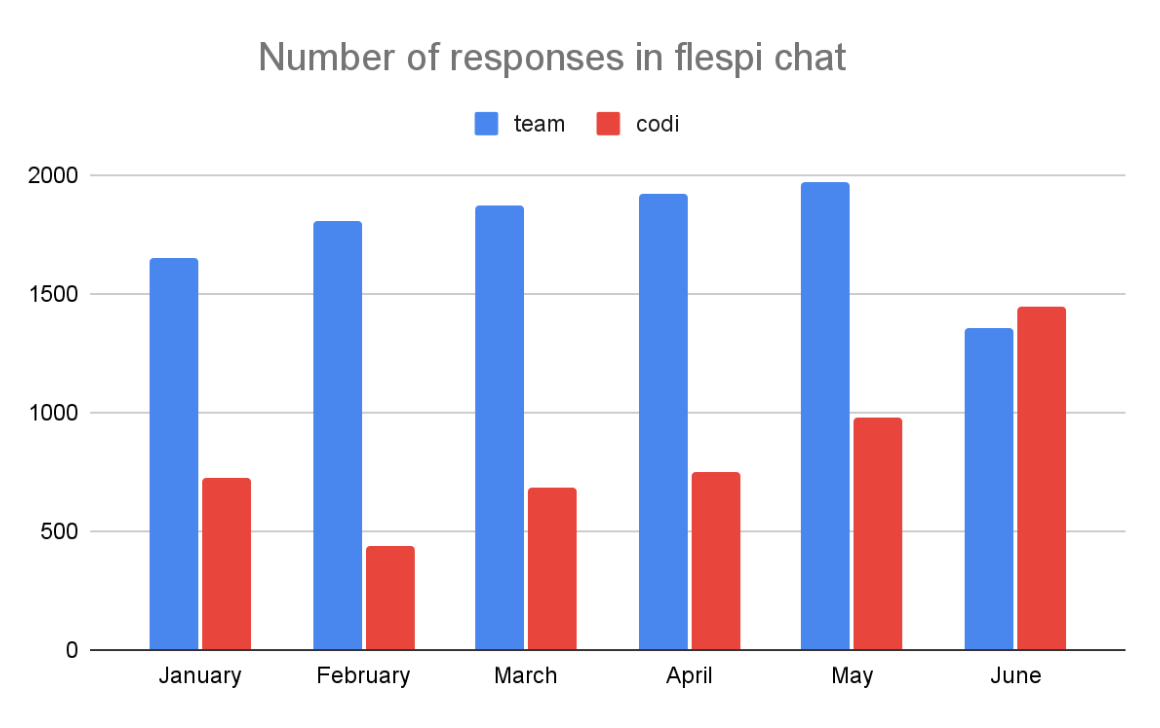

To illustrate codi's performance in our chat and its impact on our team, let me show you this simple comparison of monthly responses between our team (humans) and codi:

As you can see, in June, codi outperformed the entire flespi team in support process work. Even more importantly, we've significantly reduced the support load on our team, which aligns perfectly with our goals. It appears we are succeeding.

Another track on our roadmap is the Tacho functionality. We've designed the architecture for the tacho solution and are currently developing it. In short, there will be two layers available for use in your system:

The low-end layer allows for seamless integration of tacho-card authorization into your own platform, which communicates directly with telematics devices. This layer provides a few simple methods to initiate and terminate authorization sessions on the tachograph card, and to relay traffic to the card reader for decryption. It is suitable for platforms that do not communicate with devices via flespi, yet require integration of company card authorization mechanics essential for any tachograph.

The high-end layer will internally leverage the low-end layer to seamlessly deliver tacho functionality for flespi protocols. To activate tacho features such as downloading .ddd files in this layer, you simply need to configure channels and card devices that will operate within the low-end layer. We believe this setup should be quite straightforward.

We are also going to release an open-source company card client application for multiple platforms that you can adapt to your needs. Additionally, we are looking into integrating tacho card hotel hardware for the mass management of company cards.

One feature comes not from our roadmap, but from our experience. We've released Recycle Bin, which gives you a second chance after the accidental deletion of various flespi items, including channels (with URI restore functionality) and devices (with message storage restore functionality). Let it be an additional safety net for your flespi experience!

That's it, not to mention the hundreds of protocol updates, new device model integrations, and minor improvements. That is our daily routine. Maybe one day, codi will even help us develop protocols. But what will be left for us humans in that scenario? ;)