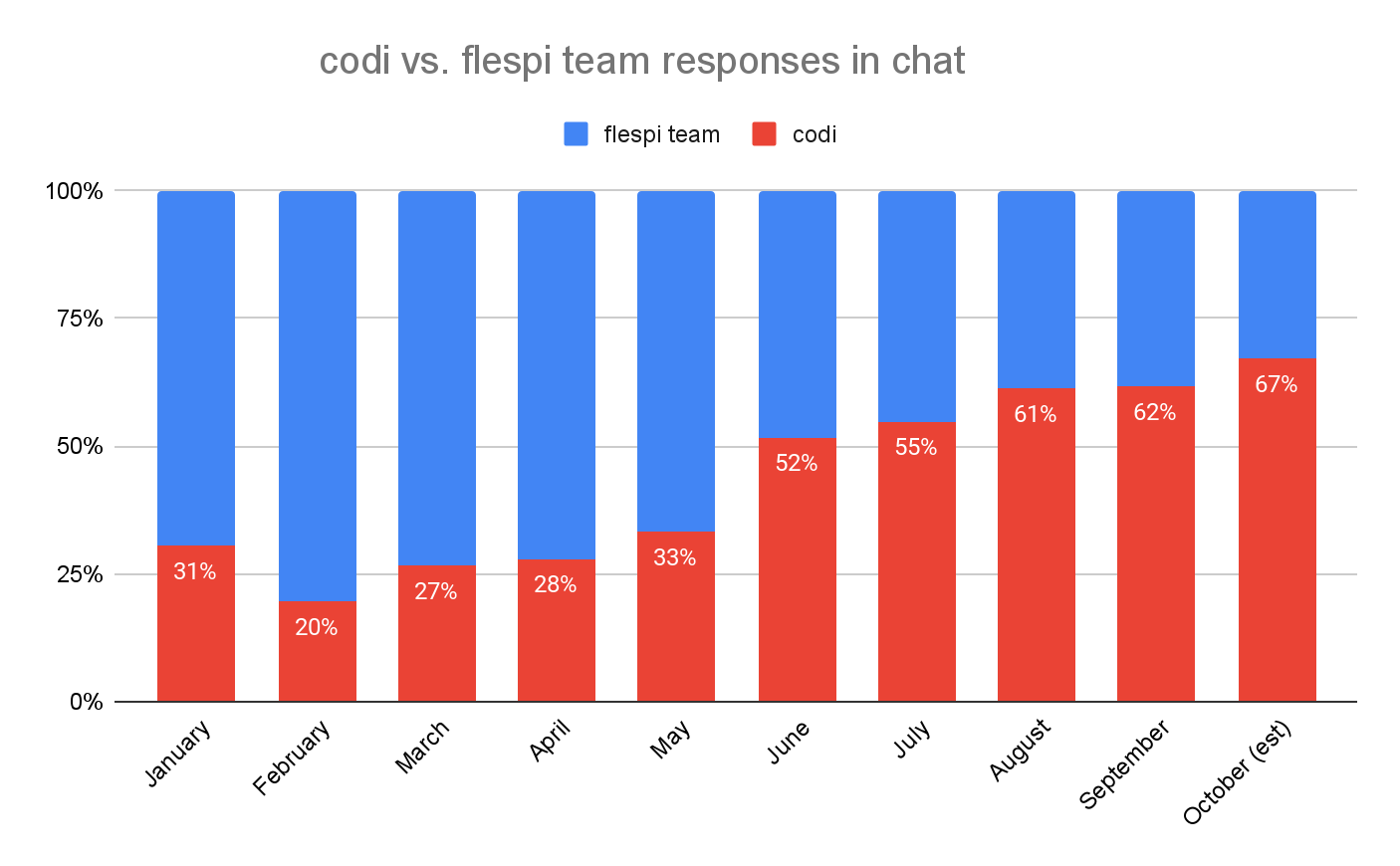

This year has definitely seen the rise of generative AI assistance in flespi. Just look at the chart below to see how codi, our AI assistant, is taking over tasks previously handled by humans:

This isn’t necessarily a bad thing. Human engineers who used to manage support communication with flespi users now delegate most routine communication to the AI and focus instead on developing the AI platform and preparing knowledge content. And there are still plenty of tasks where humans do much better.

In July, I discussed the reasons behind the summer jump on this chart and explained the third-generation architecture that powers our AI assistant. Since then, the architecture hasn’t changed much. Instead, we’ve been steadily improving the platform’s components: knowledge, tools, and instructions.

Our feedback loop and the evaluation of codi’s answers are not yet automated. I personally read most of the communication with the AI and evaluate the quality of its responses. If I spot an obvious mistake, I expand the model’s output, review its reasoning, and then fix the cause on our AI platform. In 90% of cases, the issue lies in the knowledge: the content of our KB, blog, or API. Improving it is as simple as editing the corresponding article and updating the information. Fortunately, corrections are now rare: we apply just a few a week.

Recently, I’ve encountered cases where codi’s response wasn’t ideal, yet there was nothing to correct, usually because the issue lay in the question itself. There’s not much I can do about that, except share this experience and offer some guidance. These points aren’t just about codi; they apply to many modern AI support agents. So here’s my list of common mistakes people make when interacting with AI support agents.

1. Not reading the AI-generated response thoroughly

We’ve deliberately enhanced the structure of AI responses. We want codi not only to generate answers but also to explain its reasoning, like a partner providing background information to build trust in its logic. We believe reasoning is essential to foster confidence in AI-generated responses.

As a result, the correct answer is often in the response, but it may be surrounded by headers, footers, and additional information. When users see a few paragraphs instead of a single, definitive sentence, they sometimes overlook the answer and keep asking the same question. Unfortunately, with AI this is counterproductive.

Tip: please always read the answer carefully before asking a follow-up question.

2. Underestimating the capabilities of AI

Architecturally, AI support agents are simple: a base LLM, instructions, knowledge (RAG), and tools. Codi is no exception, and it’s packed with tools that give it powerful capabilities.

For example, to handle network-related issues, it can perform traceroutes and pings from flespi to third-party IPs, resolve domain names using our name server infrastructure, and access information from the flespi NOC, including real-time data on latency and uptime across global NOC nodes.

Compare this with a human engineer responding to your urgent issue: something isn’t working, and you’re unsure whether it’s a global problem (even though flespi NOC remains quiet), a local issue in your system, or something in between. The engineer would have to run the same checks by hand.

I’ve noticed that people often hesitate to rely on AI in urgent situations: the problem feels critical, and they don’t want to “waste time” on AI. In my view, this is because people aren’t yet accustomed to what AI can do.

But in 2024, AI support agents equipped with the right tools can diagnose urgent local issues faster than humans. Their abilities are improving rapidly, and for many problems they can already provide a good resolution.

Tip: give AI a chance, even in critical situations.

3. Not providing an item's identifier

This is related to the previous point but is a distinct issue. Many users still think of AI as a live documentation assistant. Instead of providing the ID of a device, plugin, webhook, or calculator, they copy its configuration or part of the log/message into the chat, seeking advice.

There’s no need to copy configurations into the chat anymore: AI can access your items directly. Given the item’s ID, codi can also access its latest logs, messages, and possibly related data from your account.

Tip: mention the item’s ID and type in your question once to provide the correct context for all further messages.

4. Wanting too much info in a single response

Our AI platform architecture supplies the LLM with tools and contextual knowledge related to the problem. There are limits to how much information we can feed the model at once. We need to give it enough to be smart, but we also have to respect the LLM’s context window (the maximum data it can process in one request) to avoid overload and keep it focused.
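To make the context-window limit more concrete, here is a minimal sketch of how retrieved knowledge might be packed into a fixed token budget. This is not codi’s actual implementation: it assumes a simple greedy strategy (most relevant first), and the `Chunk` type, its relevance `score`, its `tokens` count, and `pack_context` are all hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """A piece of retrieved knowledge (e.g. part of a KB article)."""
    text: str
    score: float  # hypothetical relevance score assigned by the retriever
    tokens: int   # approximate size of the chunk in tokens


def pack_context(chunks: list[Chunk], budget: int) -> tuple[list[Chunk], int]:
    """Greedily select the most relevant chunks that fit into `budget` tokens."""
    selected: list[Chunk] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.score, reverse=True):
        # Skip any chunk that would overflow the remaining budget.
        if used + chunk.tokens <= budget:
            selected.append(chunk)
            used += chunk.tokens
    return selected, used


if __name__ == "__main__":
    retrieved = [
        Chunk("devices: configuration basics", 0.9, 600),
        Chunk("streams: forwarding to a server", 0.8, 500),
        Chunk("calculators: interval detection", 0.5, 300),
    ]
    picked, used = pack_context(retrieved, budget=1000)
    for chunk in picked:
        print(chunk.text)
    print("tokens used:", used)
```

With a 1,000-token budget, the streams chunk is dropped even though it scores higher than the calculators chunk, simply because it no longer fits. A question that spans several subsystems pulls in more knowledge than the window can hold, so some of it inevitably gets left out. Real retrieval pipelines may use different selection strategies.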

In chats, I’ve seen people ask the model to combine several flespi subsystems, expecting it to provide all the configurations and even server-side code in a single response. It won’t. We may overcome this limitation in the future, but for now, it’s best to address issues one at a time to get the best AI-generated responses.

Tip: for complex questions, it’s better to move step by step with the AI, focusing on one aspect at a time.

5. Not accepting ‘no’ from AI

This is a big issue. Imagine you ask codi a question it can’t solve. LLMs are designed to provide answers and work around limitations, so we’ve had to specifically instruct codi to respond “Not possible” as a safe exit when there’s no solution. But people often struggle to accept ‘no’ from AI. Many times, when codi says “Not possible”, users switch off the AI and ask a human the same question.

Tip: trust the AI. If it says ‘no’, it means there’s truly nothing else that can be done.

6. Steering the AI in the wrong direction

Here’s what can happen when you don’t accept ‘no’ from codi and push it to find an alternative, or ask it to help you down the wrong path.

Codi will try its best to help even if the path is off track; it’s just doing its job. But sometimes this makes things worse.

The root of this issue lies in RAG: we load the LLM context with information tied to your question, and codi builds from there. For example, you ask codi to detect when a vehicle door is opened or closed. It suggests using either a calculator or a plugin. You go with the plugin, then ask codi how to handle an event when the door stays open for 3 minutes. And here’s the problem.

Plugins can only log an event when the state changes. But if you need to track a state over time, the only reliable option is a calculator. Since your initial question was plugin-focused and codi is deep into plugin knowledge, it will go all out, generating PVM code that might work... but probably won’t. This happens because the AI context has already been skewed by the path you set it on. The fix? A trick that often helps: when the AI’s solution feels overly complicated, ask it to reconsider its answer.

Tip: ask codi to reset and suggest a fresh setup for your solution, or to explore alternative approaches.
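To illustrate why a state-change event alone can’t catch a door left open for 3 minutes, here is a generic Python sketch comparing the two kinds of logic. It is not PVM and not how flespi plugins or calculators are implemented; the function names and the `(timestamp, door_open)` message format are hypothetical. Reporting transitions only needs the previous value, while a duration check must remember when the open interval started and compare elapsed time against a threshold.

```python
def state_changes(messages):
    """Report only transitions: the kind of event a state-change trigger emits."""
    changes = []
    previous = None
    for ts, door_open in messages:
        if previous is not None and door_open != previous:
            changes.append((ts, door_open))
        previous = door_open
    return changes


def open_too_long(messages, threshold=180):
    """Report the first message timestamp at which the door has been open
    for at least `threshold` seconds, once per open interval."""
    alerts = []
    opened_at = None  # start of the current open interval, if any
    alerted = False   # whether this interval has already been reported
    for ts, door_open in messages:
        if not door_open:
            opened_at = None
            continue
        if opened_at is None:
            opened_at = ts
            alerted = False
        if not alerted and ts - opened_at >= threshold:
            alerts.append(ts)
            alerted = True
    return alerts


if __name__ == "__main__":
    # (timestamp in seconds, door_open) pairs, sorted by time
    msgs = [(0, False), (10, True), (100, True), (200, True),
            (250, True), (260, False), (300, True), (400, True)]
    print(state_changes(msgs))  # transitions only
    print(open_too_long(msgs))  # door open for 3+ minutes
```

Note that in this sketch the duration check is only evaluated when a message arrives, so the alert fires on the first message after the threshold, not at the exact 180-second mark.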

I believe we’ll soon overcome the limitations described here and keep human/AI communication from veering off track. But to get the most out of AI today, consider applying these strategies to your interactions; they will make your experience with flespi even more productive.